Raisely is an online fundraising platform for ambitious charities across the world. We recently migrated our hosting, increased our page load speed by 5x and reduced the computing power needed to serve those pages. Find out how we did it!

How we lowered latency and maintenance with fly.io

About 2 years ago, we moved Raisely's hosting to NGINX+Lua, and we did what all the cool kids were doing to scale containers automatically: we ran it in Kubernetes.

In lots of ways, it was great. We were doing things we'd never done before: SSL termination and certificate issuance for custom domains were handled immediately and automatically without dropping a single request, and the key dynamic data for each page was embedded in the response, so our React components were ready to paint as soon as our main JavaScript bundle arrived.
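The post doesn't show how that page data was embedded. A common pattern is to inline it as JSON in a script tag that the bundle reads on startup. The TypeScript sketch below assumes a hypothetical PageData shape and a hypothetical window.__INITIAL_DATA__ global. The escaping is the important part: campaign content is user-supplied, and a raw "</script>" inside it would otherwise end the tag early.

```typescript
// Hypothetical shape of the per-page data; the real payload isn't described in the post.
interface PageData {
  campaignName: string;
  raised: number;
  goal: number;
}

// Serialize data so it can be inlined in a <script> tag without the payload
// being able to close the tag. In JSON, <, > and & can only occur inside
// strings, where \u escapes decode back to the same characters.
function serializeForScript(data: unknown): string {
  return JSON.stringify(data)
    .replace(/</g, "\\u003c")
    .replace(/>/g, "\\u003e")
    .replace(/&/g, "\\u0026")
    // U+2028/U+2029 are valid in JSON but historically broke JS string literals.
    .replace(/\u2028/g, "\\u2028")
    .replace(/\u2029/g, "\\u2029");
}

// Render a minimal HTML shell with the data inlined ahead of the (deferred) bundle,
// so the React app can read it synchronously the moment it boots.
function renderPage(data: PageData, bundleUrl: string): string {
  return [
    "<!doctype html>",
    "<html>",
    "<head>",
    `<script>window.__INITIAL_DATA__ = ${serializeForScript(data)};</script>`,
    `<script src="${bundleUrl}" defer></script>`,
    "</head>",
    '<body><div id="root"></div></body>',
    "</html>",
  ].join("\n");
}
```

On the client, the app would read window.__INITIAL_DATA__ before its first render instead of waiting on an API round trip.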

But we're a team of primarily JavaScript engineers, and as we grew, we found that the issues we encountered were pushing the limits of our knowledge of Kubernetes, NGINX and Lua.

Sure, we could’ve invested more time into understanding that part of our stack (one of the great things about our engineering team is that everyone is a bit of a generalist and keen to learn new things), but at the end of the day, we want to be launching features to support our amazing clients. There aren’t nearly as many features we can build with Kubernetes, NGINX, and Lua as we can with Node + React.

So we've been shopping around for a platform that would let us stick to the technology we're good at, scale better, and still handle custom domains painlessly. Enter fly.io.

If you’ve never heard of Fly, it offers a simple way to deploy apps or containers and scale them globally, and I mean simple.

Tinkering / updating? flyctl deploy

Need more instances? flyctl autoscale set min=5 max=10

Need some instances close to Sydney? flyctl regions add syd

Deployment can be from either an app folder or a container, and it usually completes in under a minute.

This last one means we can now start working towards services that respond quickly for clients around the globe. Since serving hosted pages only requires read-only access to our clients' data, we can pair these Fly regions with database read replicas in the same region, so each instance can pull the data it needs incredibly quickly.
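Fly exposes the region an instance is running in through the FLY_REGION environment variable, which makes that pairing easy to express at startup. A minimal TypeScript sketch, assuming hypothetical REPLICA_URL_<REGION> variables plus a REPLICA_URL_DEFAULT fallback (the post doesn't say how the replicas are configured or which database is used):

```typescript
// Environment as a plain map so the function is easy to test; pass process.env in the app.
type Env = Record<string, string | undefined>;

// Pick the read replica closest to this instance.
// Hypothetical convention: REPLICA_URL_SYD, REPLICA_URL_IAD, ... plus REPLICA_URL_DEFAULT.
function pickReplicaUrl(env: Env): string {
  const region = env.FLY_REGION?.toUpperCase(); // e.g. "syd" -> "SYD"
  const regional = region ? env[`REPLICA_URL_${region}`] : undefined;
  const url = regional ?? env.REPLICA_URL_DEFAULT;
  if (!url) {
    throw new Error(`No read replica configured for region ${region ?? "(unset)"}`);
  }
  return url;
}
```

Falling back to a default replica means a newly added region still serves pages (just with a longer round trip) before its own replica exists.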

Fly ticked a lot of boxes for what we needed:

Simple to manage and scale: as you saw above, you specify the minimum and maximum number of instances, and you also set a soft and a hard limit on the number of concurrent connections an instance can receive.
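Roughly speaking, the soft limit is the load at which an instance counts as busy, so new connections start preferring other instances, and the hard limit is where the instance stops being sent new connections at all. The simplified TypeScript model below illustrates that relationship only; it is not Fly's proxy code, and the "prefer under soft, then under hard" selection policy is an assumption for illustration.

```typescript
// Simplified model of soft vs hard concurrency limits (not Fly's implementation).
interface ConcurrencyLimits {
  softLimit: number; // at or above this, the instance is treated as busy
  hardLimit: number; // at or above this, the instance gets no new connections
}

type InstanceState = "accepting" | "over_soft_limit" | "full";

function instanceState(active: number, limits: ConcurrencyLimits): InstanceState {
  if (active >= limits.hardLimit) return "full";
  if (active >= limits.softLimit) return "over_soft_limit";
  return "accepting";
}

// Choose an instance index for a new connection: prefer one under the soft limit,
// otherwise any under the hard limit; -1 means every instance is full.
function chooseInstance(activeCounts: number[], limits: ConcurrencyLimits): number {
  const preferred = activeCounts.findIndex((n) => instanceState(n, limits) === "accepting");
  if (preferred !== -1) return preferred;
  return activeCounts.findIndex((n) => instanceState(n, limits) === "over_soft_limit");
}
```

In this model, a low soft limit spreads load early, while a high hard limit leaves headroom to absorb a burst while extra capacity comes online.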

SSL Termination & Certificates: In its default configuration, Fly terminates the SSL connection before forwarding the request to our server, so that's one less thing for us to build and maintain. We use their GraphQL API to let admins configure custom domains from the Raisely Admin.
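A sketch of what registering a custom domain might look like from a Node backend. The endpoint (https://api.fly.io/graphql), the addCertificate mutation and the fields requested reflect our reading of flyctl's source and should be checked against Fly's current API docs; the app name is hypothetical. The function only builds the request, so it can be tested without network access.

```typescript
// Assumed Fly GraphQL endpoint and mutation; verify before relying on them.
const FLY_GRAPHQL_ENDPOINT = "https://api.fly.io/graphql";

const ADD_CERTIFICATE = `
  mutation($appId: ID!, $hostname: String!) {
    addCertificate(appId: $appId, hostname: $hostname) {
      certificate { id hostname }
    }
  }`;

interface GraphQLRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Build the HTTP request that asks Fly to issue a certificate for a client's domain.
// The hostname is trimmed and lowercased because admins type it in by hand.
function buildAddCertificateRequest(appId: string, hostname: string, token: string): GraphQLRequest {
  return {
    url: FLY_GRAPHQL_ENDPOINT,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${token}`,
    },
    body: JSON.stringify({
      query: ADD_CERTIFICATE,
      variables: { appId, hostname: hostname.trim().toLowerCase() },
    }),
  };
}
```

In the app this would be sent with something like fetch(req.url, req); the client's DNS still has to point the domain at the Fly app before the certificate can be issued.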

We were able to get it up and running pretty fast: we built a Node app that connects to our read replicas to compile and serve our clients' pages. Adding one last feature really completed the package:

On our old hosting platform I'd experimented with micro-caching and had been really pleased with the results, so I wanted to apply it to the new platform. We could've built this part in Node too, but it's something NGINX is really suited to. Why reinvent a caching layer in Node when we could just drop an NGINX server with a fairly standard config in front?
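Micro-caching means caching dynamic responses for a very short time (typically a second or so), so a traffic spike on one page turns into roughly one origin request per page per interval. Raisely does this in NGINX; the TypeScript class below only illustrates the idea. Its name and API are hypothetical, and the clock is injectable so the behaviour can be tested.

```typescript
interface CachedEntry<V> {
  value: V;
  expiresAt: number; // epoch milliseconds
}

// Minimal micro-cache: values are reused until their short TTL runs out.
// Expired entries are overwritten on the next miss but never swept;
// a production cache would also bound its size.
class MicroCache<V> {
  private entries = new Map<string, CachedEntry<V>>();

  constructor(
    private readonly ttlMs: number,
    private readonly now: () => number = () => Date.now(),
  ) {}

  // Return a still-fresh cached value, or compute, store and return a new one.
  getOrCompute(key: string, compute: () => V): V {
    const t = this.now();
    const hit = this.entries.get(key);
    if (hit && hit.expiresAt > t) return hit.value;
    const value = compute();
    this.entries.set(key, { value, expiresAt: t + this.ttlMs });
    return value;
  }
}
```

If V is a Promise, concurrent misses for the same key share one in-flight render, similar in spirit to NGINX's proxy_cache_lock; the trade-off is that a rejected promise is also cached for the TTL.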

So we built a custom Docker image that runs NGINX in front of Node in each container. Yes, this is cheating. If we were following best practice, we'd have separate NGINX containers that scale independently and sit in front of the Node containers. We may well do that one day as we scale, but for now that's a lot more to manage and coordinate, and this cosy shared container works nicely and has brought our response times down a lot.

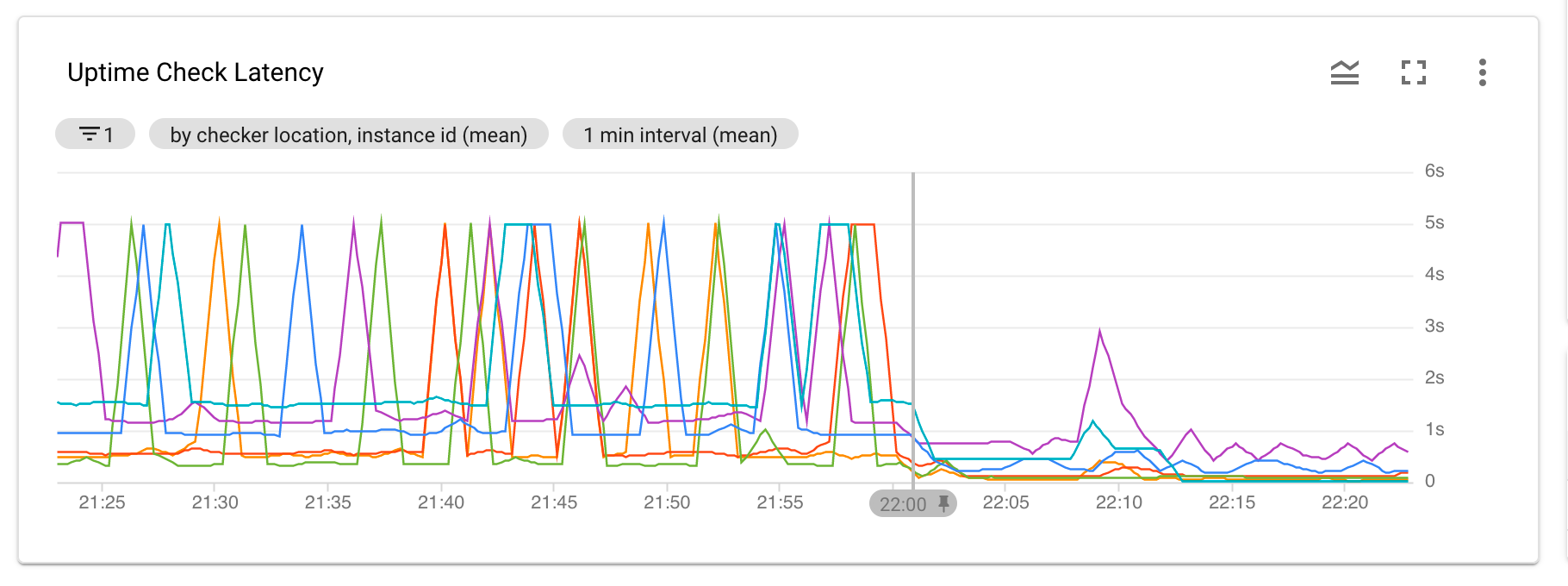

Before and after the changeover to our new fly.io hosting

Could we have done this with our existing NGINX, Lua, Kubernetes setup? Sure. Could we have done it in the same amount of time and the same level of maintenance? No way.

The Setup

So how did we create this Franken-container that's yielded such fast response times?

Let's go through the basic setup. First, our Dockerfile: it's based on nginx-alpine, and we add Node and tini, a minimal init that reaps the two child processes (nginx & node) and forwards signals to them.

The Docker container (Dockerfile source) launches a short shell script that merges the current environment into nginx.conf and then starts NGINX and Node.
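The post doesn't include that script. In a shell, envsubst is the usual tool, but called without a list of variable names it substitutes every $NAME it finds, so NGINX's own variables such as $host silently become empty strings; passing the names explicitly avoids that. The TypeScript sketch below shows the same rule, replacing only allow-listed ${NAME} placeholders. The placeholder names are hypothetical.

```typescript
type Env = Record<string, string | undefined>;

// Fill ${NAME} placeholders in an nginx.conf template from the environment.
// Only allow-listed names are replaced, so NGINX runtime variables
// ($host, $request_uri, ...) and unrelated placeholders pass through untouched.
function renderNginxConf(template: string, env: Env, allowed: readonly string[]): string {
  return template.replace(/\$\{([A-Z0-9_]+)\}/g, (match: string, name: string) => {
    if (!allowed.includes(name)) return match; // not ours: leave as-is
    const value = env[name];
    if (value === undefined) {
      // Fail loudly at boot rather than start NGINX with a broken config.
      throw new Error(`Missing environment variable ${name} required by nginx.conf`);
    }
    return value;
  });
}
```

Failing at startup on a missing variable means a bad deploy shows up as a failed health check instead of a half-working proxy.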

The final piece is nginx.conf. After our previous experience with NGINX + Lua, we wanted to keep it simple: just proxy & cache.

The nginx.conf linked above is an abridged version, and you'll see some variables being set that are later used in the proxying. In our full configuration, we use these to forward specific requests to alternative services that handle them. Because those services are often cloud functions on shared IPs, we also need to set the correct Host header on the proxied request so it can be routed correctly.
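A sketch of that routing decision, written in TypeScript rather than NGINX config to make the logic explicit. The route prefix, upstream URL, hostname and local port are all hypothetical. Shared-IP platforms pick the target service from the Host header, so forwarding the campaign's custom domain as Host wouldn't reach the right function; in NGINX that override is proxy_set_header Host, and for HTTPS upstreams you typically also need proxy_ssl_server_name on, since NGINX doesn't send SNI to upstreams by default.

```typescript
interface Upstream {
  url: string;        // where the proxy connects
  hostHeader: string; // Host header the upstream needs to route the request
}

interface Route {
  prefix: string;
  upstream: Upstream;
}

// Hypothetical routes to services hosted elsewhere.
const ROUTES: Route[] = [
  {
    prefix: "/api/export/",
    upstream: {
      url: "https://functions.example-cloud.net/export",
      hostHeader: "functions.example-cloud.net",
    },
  },
];

// Decide where a request goes and which Host header to send with it.
function resolveUpstream(path: string, incomingHost: string): Upstream {
  const match = ROUTES.find((r) => path.startsWith(r.prefix));
  if (match) return match.upstream;
  // Default: the Node app in the same container, keeping the client's Host
  // so Node knows which custom domain (and therefore which campaign) was requested.
  return { url: "http://127.0.0.1:3000", hostHeader: incomingHost };
}
```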

One important thing to note is the cache key, which can cause you pain if you don't configure it correctly. NGINX only ever receives HTTP requests because Fly terminates SSL. However, since we want to force SSL, our Node server redirects non-SSL requests. So we need to use the X-Forwarded-Proto header ($http_x_forwarded_proto in NGINX, not $scheme) as part of the cache key. Otherwise a non-SSL request comes in and gets redirected, that redirect is cached, and the cached redirect is then served again when the browser follows it, causing an infinite loop.
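NGINX's default proxy_cache_key is $scheme$proxy_host$request_uri, and behind Fly's TLS termination $scheme is always http, so HTTP and HTTPS requests for the same page share one cache entry. A key along the lines of "$http_x_forwarded_proto://$host$request_uri" keeps them apart. The TypeScript function below mirrors that rule so it can be tested; its name and the header-map shape are hypothetical, and header names are assumed to be lowercased, as Node does for incoming requests.

```typescript
type RequestHeaders = Record<string, string | undefined>;

// Build a cache key from the scheme the *client* used, not the one the proxy saw.
// Falls back to the proxy's scheme if X-Forwarded-Proto is absent.
function cacheKey(requestUri: string, headers: RequestHeaders, schemeSeenByProxy: string): string {
  const forwarded = headers["x-forwarded-proto"]?.toLowerCase();
  const proto = forwarded || schemeSeenByProxy;
  const host = (headers["host"] ?? "").toLowerCase();
  return `${proto}://${host}${requestUri}`;
}
```

With this key, the cached redirect for http://give.example.org/ can never be served to the follow-up https://give.example.org/ request, which is what breaks the loop.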

The only other file you'll need is your fly.toml, which flyctl generates when you get started. Ours is standard, except that we've added a health check URL and tweaked the hard and soft limits in the services.concurrency section. We worked out the best values by load testing the container to see when it needed to trigger scaling, and ended up setting our soft limit low and our hard limit high.
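The post doesn't give the load-test numbers or the method used to read them. One hypothetical way to turn a series of runs at increasing concurrency into a soft-limit candidate is to take the highest concurrency whose p95 latency stays within a budget; the hard limit would then sit well above it, matching the "soft low, hard high" outcome described above. The types, function names and budget are assumptions for illustration.

```typescript
interface LoadTestRun {
  concurrency: number;   // concurrent connections held during the run
  latenciesMs: number[]; // observed response times for that run
}

// Nearest-rank percentile, p in (0, 100].
function percentile(values: number[], p: number): number {
  if (values.length === 0) throw new Error("no samples");
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length); // 1-based rank
  return sorted[Math.max(0, rank - 1)];
}

// Highest tested concurrency whose p95 latency stays within the budget,
// or undefined if no run met it.
function suggestSoftLimit(runs: LoadTestRun[], p95BudgetMs: number): number | undefined {
  const ok = runs
    .filter((r) => percentile(r.latenciesMs, 95) <= p95BudgetMs)
    .map((r) => r.concurrency);
  return ok.length > 0 ? Math.max(...ok) : undefined;
}
```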

And that’s it, the only ingredients we needed (aside from the Node app itself) to build and deploy our fly edge container.

Ready to host a fast-loading landing page for your fundraising campaign? Get started today!


Technical Lead at Raisely